AI policy has become industrial policy, export control, and alliance management at once. Since 2020, Washington and Beijing have converged on a blunt insight: whoever writes the rules for models, chips, and data flows sets the pecking order. Both are building regulatory perimeters that do more than “keep us safe.” They decide who gets compute, who trains frontier systems, who sells across borders, and who is cut off when it counts. The result is a world splintering into partly incompatible rule-zones atop the hard chokepoints of lithography, memory, and cloud. The AI 2027 scenario frames the stakes: in this mid-decade window, governance of compute, model distribution, and cross-border data can matter as much as scientific progress. Rules are turning into rails, and the network is being rewired.

Three Regulatory Paradigms: The American Chokepoint, the Chinese Filings State, and Europe’s Referee

Washington’s core paradigm since October 2022 has been to weaponize chokepoints. The Commerce Department’s Bureau of Industry and Security (BIS) rolled out sweeping “advanced computing” rules that cut China off from high-end GPUs and much semiconductor equipment, then tightened and clarified those controls in 2023 and 2024. In January 2025, Commerce went further and, for the first time, put export controls on AI model weights. This was an explicit recognition that in a frontier-model world, weights can be as strategically sensitive as wafers. Other measures are still in the pipeline, including a pending “know-your-cloud-customer” regime for US Infrastructure as a Service (IaaS) providers. It would require providers to report when foreign customers train large AI models and would let the US government block cloud access for countries or companies deemed a security risk. The logic is clear: starve adversaries of compute, visibility, and proprietary parameters.

Beijing’s paradigm is different. Rather than relying only on broad safety rules, China requires public-facing AI services to be formally registered with regulators. This mandatory filing system makes providers and their models visible, trackable, and instantly sanctionable. The algorithm recommendation provisions (2022) and deep-synthesis rules (effective January 2023) were the first to require companies to explain how their algorithms work, label AI-generated content so its origin is clear, and attach watermarks so it can be traced. The 2023 Generative AI Measures created the scaffolding for safety testing, security reviews, and provider responsibility. In 2025 China added a synthetic-content labeling rule and, crucially, has operationalized the filing system: hundreds of generative AI services have now registered with the Cyberspace Administration, a compliance lever that can tighten overnight. This is regulation as operational command and visibility at scale.

Europe, for its part, has installed a referee. The AI Act entered into force in 2024. Bans on the riskiest AI practices and AI-literacy duties for providers and deployers have applied since February 2nd, 2025. Obligations for general-purpose AI models, such as those behind ChatGPT or Gemini, have applied since August 2nd, 2025. Strict compliance rules for high-risk uses such as credit scoring, hiring, or law enforcement phase in through 2026–2027. A Commission-endorsed Code of Practice for general-purpose AI (GPAI) now gives providers a documented pathway on transparency, copyright, and systemic-risk controls. Brussels is not trying to build semiconductor fabrication plants or cloud chokepoints; it is trying to write the audit playbook the rest of the world will be asked to follow.

The New Gates: Compute, Clouds, Weights, and Access

What has changed since 2020 is not just that controls exist, but that they now target the entire stack. On the hardware side, BIS rules issued from 2022 to 2024 blocked China and other targets from accessing the most powerful GPUs and supercomputers. In 2023 the agency fine-tuned the performance thresholds and expanded the list of covered countries, and in 2024 it made further adjustments to close loopholes. On data, Washington has moved to curb access by “countries of concern” to sensitive US personal and government-related data, while Commerce’s IaaS proposal would impose Know Your Customer (KYC) requirements on cloud customers and require reporting of certain foreign model-training events. That combination turns the cloud into a governed border.

Two 2025 developments underline how far the paradigm has moved. First, Commerce’s interim controls on model weights acknowledge that parameters themselves are dual-use objects that can be exfiltrated, fine-tuned, and weaponized. Second, the private sector has begun to internalize the chokepoint logic. Anthropic’s decision on September 4th to block companies majority-owned or controlled by Chinese entities worldwide from accessing Claude models is the most visible example of a US lab voluntarily matching its access rules to US geopolitical boundaries, closing the subsidiary and reseller loopholes that had persisted. Corporate policy is now an extension of statecraft.

The Nvidia “H20 saga” shows how fluid and political these gates can be. After Washington tightened restrictions in April, licensing and policy shifts in July and August signaled a partial reopening for the H20, a cut-down GPU built for the Chinese market, while Chinese buyers oscillated between eager demand and security-minded caution. Whatever the transient licensing posture, the broader picture is consistent: the United States is learning to meter compute exports, and China is learning to substitute, hoard, and route around. Neither side intends to return to the pre-2022 world.

China’s Rulebook: Safety by Filing, Sovereignty by Integration

China’s domestic governance since 2022 has three macro-traits that matter for global competition.

First, the filings state. By mid-2025, hundreds of generative AI services had completed filings with the Cyberspace Administration of China (CAC). Filing is not a rubber stamp; it is a live lever. It makes the model landscape enumerable for regulators and makes providers instantly sanctionable when the rules change. For a state worried about deepfakes, social stability, and platform power, filings combine surveillance and safety in a single instrument.

Second, the labeling turn. Deep-synthesis rules already mandated that AI-generated media carry provenance information (disclosures about when, how, and with what system the content was created) and watermarks, embedded digital markers that signal a piece of content was machine-generated. The 2025 synthetic-content labeling measures add a more explicit framework for marking machine-made content. Coupled with the Generative AI Measures’ content standards and security requirements, the labeling suite is about keeping the distribution layer governable. Beijing’s calculus is straightforward: if it cannot fully control model internals, it will control outputs and circulation.

Third, integration as strategy. The State Council’s “AI Plus” guideline, foreshadowed in the 2024 Government Work Report and formalized in August 2025, directs AI into production, services, governance, and public welfare, and promises standards, pilots, and compute support. Municipal governments are already subsidizing compute “vouchers” so SMEs can rent training time in underutilized data centers. The aim is to convert breadth of application into depth of capability, and to do it under policy control.

Beijing has, in parallel, eased a notorious friction point for foreign and joint-venture firms: cross-border data. The CAC’s March 22, 2024 provisions loosened the rules by raising the volume of data that triggers a security review, carving out exemptions for common cross-border transfers like HR or e-commerce data, lengthening how long a passed assessment remains valid, and letting free-trade zones test their own “negative lists” of data types that still require approval. Financial-sector guidelines followed in April 2025. It is a calibration: tighten AI content and model safety domestically, but lower the cost of legitimate data exports to keep manufacturing and services hooked into global value chains.

China is building a system in which speech and synthesis are tightly managed, filings make models and providers legible, and industrial policies pull AI into every sector. The outcome is not just national champions; it is an AI-enabled administrative state with real-time levers over content, models, and computation.

America’s Rulebook: Dominance Through Denial, and a Swing Back to Deregulation at Home

On the US side, there has been a decisive external turn and a contested internal one. Externally, BIS continues to update export controls on advanced computing items and semiconductor manufacturing equipment, with 2023 clarifications and 2024 corrections. Treasury’s outbound investment program and separate data-security rules add financial and information layers to the perimeter. Internally, the shift from the 2023 Biden Executive Order on AI to the January 23rd, 2025 Executive Order 14179, “Removing Barriers to American Leadership in AI,” signals a re-centering on innovation and industrial build-out over precaution. The Office of Management and Budget’s April guidance to agencies under the new Executive Order emphasizes accelerating federal use of AI while keeping civil-rights guardrails. The net effect is not laissez-faire; it is a rebalancing in which the most stringent measures are aimed outward and the domestic posture leans into speed.

Two additional US instruments are worth flagging as harbingers. First, the infrastructure-as-a-service rulemaking—if finalized in its proposed form—would push Know Your Customer (KYC) and training-run reporting into the business model of American cloud providers, effectively deputizing them as border agents in the compute supply chain. Second, the model-weights controls will force labs to treat parameter artifacts as controlled items with licensing and chain-of-custody obligations. Combined, these moves turn what used to be open, fungible resources into governed assets.

The real risk is not over-regulation; it is strategic instability. If the pendulum swings sharply on domestic guardrails and procurement rules with each administration, Washington may remain formidable abroad while staying erratic at home. That inconsistency complicates alliance coordination and creates compliance whiplash for industry.

Europe’s Play: Procedural Power and the Long Arm of Compliance

Europe has no Nvidia, and even ASML, its lithography champion, exports under controls aligned with Washington’s rather than on its own political terms. What Europe does wield is regulatory gravity. The AI Act’s staged application is now in force: bans and AI-literacy duties since February 2nd, 2025; general-purpose model obligations since August 2nd, 2025; high-risk obligations through 2026–2027. The Commission’s Code of Practice for General-Purpose AI (GPAI) gives providers a template on transparency documentation, copyright policies, and systemic-risk practices, with draft guidance on core concepts published over the summer. For global providers, “EU-first” compliance becomes the default configuration that then propagates elsewhere.

Brussels is also learning to export supervision. The new AI Office, national market-surveillance authorities, and a growing library of Q&As and guidelines mean that “how to audit a frontier model” is increasingly defined on EU terms. By refusing calls to pause the AI Act’s implementation timeline, the Commission has chosen to lock in that procedural power even as US domestic policy shifts and China races to industrialize AI. The most important feature of the EU’s approach may be its endurance. Its consequence is that third-country firms will be forced into two overlapping compliance regimes: EU auditability and US exportability. For many, that is a pincer.

The Access Wars: When Corporate Policy Mirrors Geopolitics

Private governance is now converging with national policy. Anthropic’s abrupt global bar on companies majority-owned or controlled by Chinese entities closes the “Singapore subsidiary” loophole some firms used to route around geography-based access limits. Chinese model providers responded within hours by courting affected customers. Even if enforcement details remain murky, the message to corporate counsel is clear: major US labs are willing to harden their perimeter in line with Washington’s security frame. Expect similar moves from other providers as EU GPAI obligations bite and as US model-weights controls propagate into contractual terms.

Meanwhile, China is institutionalizing domestic substitutes and industrial demand. The State Council’s AI Plus plan, combined with compute vouchers and deep-synthesis labeling, reduces dependence on Western APIs while making domestic models defaults in public services and priority industries. Access is becoming ideological and infrastructural, not just commercial.

International processes have not stood still. The G7 Hiroshima Process has yielded guiding principles and a code of conduct for advanced AI developers, with the OECD piloting reporting frameworks. The UK’s AI Safety (now Security) Institute has built out evaluation work and released the International AI Safety Report in January 2025. The UN General Assembly adopted a first-ever consensus AI resolution in March 2024. These are valuable, but they remain soft law. The hard law that determines who trains, sells, and deploys at scale is still being written in Washington, Beijing, and Brussels.

Multilateralism will not harmonize the two dominant paradigms in time to avoid fragmentation. Soft-law commitments on transparency and evaluation cannot stop a licensing officer’s “no,” a CAC filing suspension, or a Commission demand for documentation under Article 53. The architecture of power is national; the vocabulary of virtue is multilateral.

Cross-Border Data: The Regulatory Frontier

Cross-border data has become the new regulatory frontier, where rules on transfer and access increasingly decide who can train, sell, and integrate AI systems. China’s 2024 provisions eased cross-border data transfer requirements, extended assessment validity, clarified exemptions, and allowed free-trade zones to use negative lists. In April 2025, financial data rules added sector-specific clarity. This lowers friction for foreign firms and keeps Chinese firms integrated with global customers and suppliers even as model policy tightens. It is a shrewd balance: promise openness at the border while maintaining control inside the fence.

On the US side, a Justice Department rule published in January 2025 limits access by countries of concern to sensitive US personal and government-related data through brokerage and vendor channels, while the proposed IaaS rule would force cloud intermediaries to surface who is training on what and where. If the IaaS rule is finalized as proposed, the two measures together would narrow legal pathways for data and compute arbitrage, shifting the economics of model training outside the US perimeter.

The bleak reading is that compliance for cross-border AI collaboration will soon require tri-jurisdictional mastery: EU auditability for products, US export and data controls for supply chains, and Chinese filings and labeling for market access. Many firms will simply opt out, shrinking the commons where international science once flowed.

What “Bleak” Looks Like by 2027: A Probable Trajectory

If present trends hold, the AI economy will soon run on two partially incompatible operating systems.

In the US sphere, access to frontier compute, leading models, and model weights will be mediated by license and contract, with cloud providers performing de facto border control. Exportability, not just profitability, will determine product strategy. US labs will increasingly embed geopolitical eligibility into their terms of service, and the line between a policy announcement and a customer cutoff will get shorter. The H20 and Anthropic episodes are previews.

In the Chinese sphere, a nationalized application drive will put models everywhere while filings, watermarking, and labeling keep content governable. Compute vouchers will backfill underused capacity; looser cross-border data rules will let Chinese manufacturers and financial firms keep moving information abroad, helping them stay plugged into global supply chains and avoid the economic shocks of full decoupling. Domestic providers will gain share as US APIs harden their perimeters. The price of participation will be compliance with a ruleset that fuses model safety with political security.

Europe will act as referee and gatekeeper for anyone selling into its market. By 2026–2027, high-risk obligations and GPAI enforcement will convert “best practice” into “documented practice,” complete with fines that bite. Providers will ship EU-ready documentation, interfaces for content provenance, and pre-canned safety cases, then reuse those artifacts in other jurisdictions by default.

The world in that configuration is more expensive, slower to interoperate, and less forgiving. It is also more brittle. When policy shifts or licensing backlogs hit, supply chains stall, and models go dark for entire customer segments. Talent flows become politicized, and the next generation of benchmarks and evaluation data risks splintering. That is the bleakness: not a catastrophe, but a steady loss of the shared substrate on which open science thrives.

Policy Implications: Choosing Where to Bend and Where to Hold

A few implications follow for governments and firms navigating this environment.

First, export controls and access policies work best when they are legible. The US model-weights controls and cloud KYC proposal are steps toward clarity. To avoid whiplash, Washington should lock in a bipartisan floor on outward-facing measures and insulate enforcement from electoral oscillation. The goal is to make denial predictable enough that allies can plan around it and consistent enough that adversaries cannot exploit the seams.

Second, Europe’s procedural power could become a stabilizer rather than a barrier if it continues to publish high-quality guidance, keeps the GPAI Code of Practice interoperable with US safety norms, and leans into technical standards on provenance and evaluation. The more the EU’s demands overlap with what labs already do for US safety cases and UK evaluations, the less fragmentation at the interface.

Third, China’s filings-and-labeling model will not disappear. Companies that need the China market will need dual operating modes: one set of content and disclosure controls for Chinese platforms, another for EU and US jurisdictions. Where that is impossible, some will exit. Policymakers should assume that divergence is durable and craft trade, research, and investment policy with that in mind.

Finally, multilateral work remains worth doing even if it does not reconcile the blocs. The Hiroshima Process and OECD pilots create a lingua franca for safety reporting that firms can reuse across jurisdictions. The UN resolution, however soft, legitimizes capacity-building for countries otherwise shut out of the AI economy. Those moves will not erase export controls or filing regimes, but they can lower the cost of compliance for everyone else.

Governing the Stack We Built

The competition between American and Chinese AI rulebooks is not a sprint for a single breakthrough; it is a contest to govern a stack—chips, clouds, weights, content, and data—that now expresses power. The rules are already hardening at each layer. The United States is perfecting denial where it holds leverage, and outsourcing enforcement to clouds and labs. China is perfecting control where it can assert it, and loosening data rules where it must. Europe is perfecting procedure where others have less patience, and exporting it through market access.

None of this is neutral. It is a re-platforming of the global economy around new iron curtains, softly drawn but no less real. For policymakers, the task is to choose which walls must be solid, which doors should stay open, and how to keep a pile of small restrictions from adding up to an outright blockade. For firms, the choice is simpler and harsher: learn to ship across three systems, or choose one and live with the walls.

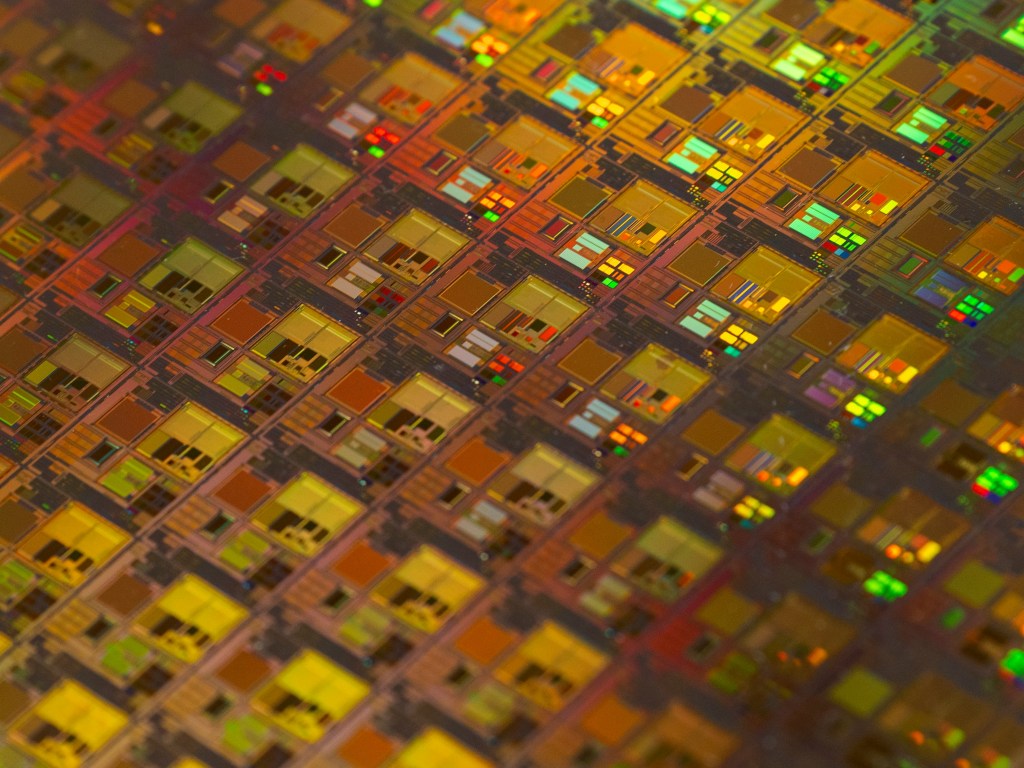

Image credits: Maxence Pira via Unsplash

Xiaolong (James) Wang

James is a Master of Science in Foreign Service student at Georgetown University specializing in AI and digital governance. Born in China and raised in Singapore, he completed his bachelor’s degree in Canada. James has worked with the G20 Research Group and the NATO Association of Canada, focusing on global governance, security policy, and multilateral diplomacy.